PinchPad: Performance of Touch-Based Gestures while Grasping Devices

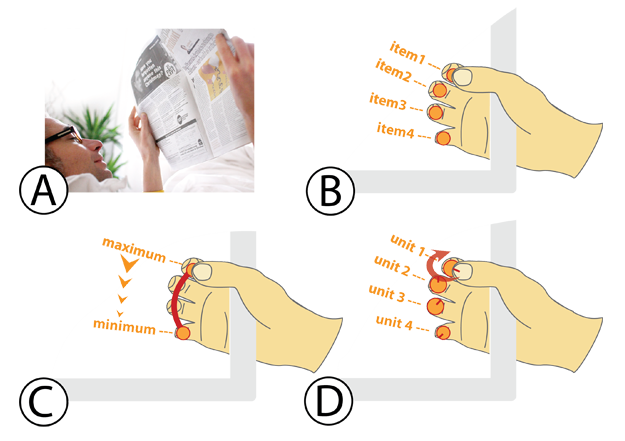

This paper focuses on combining front-of-device and back-of-device interaction on grasped devices using touch-based gestures. We designed generic interactions for discrete, continuous, and combined gesture commands that are executed without hand-eye control, because the performing fingers are hidden behind the grasped device. The interactions are designed so that the thumb can always serve as a proprioceptive reference for guiding finger movements, applying embodied knowledge about body structure. To evaluate them, we attached two iPads back to back to form a double-sided touch screen device: the PinchPad. In a user study, we tested these touch-based interactions for their performance and for users’ perceived task load. We discuss the main errors that led to a decrease in accuracy, identify stable features that reduce the error rate, and discuss the role of ‘body schema’ in designing gesture-based interactions where users cannot properly see their hands.

This project was a collaboration with Christian Müller-Tomfelde, Kelvin Cheng, and Ina Wechsung.