-

Real-time Intersensory Discomfort Compensation, DFG

Funded by the DFG (Priority Program SPP 2199)
Duration: 2024-2027
Principal Investigators: Prof. Dr.-Ing. Katrin Wolf, Berlin University of Applied Sciences and Technology; Prof. Dr. Albrecht Schmidt, LMU Munich

Sensory illusions are the basis for believable mixed reality experiences. Visual illusions, such as animated images, have already been well researched. However, the illusions that are essential for […]

-

Manipulation of virtual self-perception through visual-haptic avatar parameters, DFG

Funded by the DFG (Priority Program SPP 2199)
Duration: 2024-2027
Principal Investigators: Prof. Dr. Niels Henze, University of Regensburg; Prof. Dr.-Ing. Katrin Wolf, Berlin University of Applied Sciences and Technology

User representations in interactive systems are essential for developing effective, efficient, and satisfactory tools. In mixed reality, this representation is called an avatar and describes virtual characters through […]

-

Pervasive Touch, DFG

Methods for Designing Robust Microgestures for Touch-based Interaction
Funded by the DFG
Duration: 2023-2026
Principal Investigators: Prof. Dr. Niels Henze, University of Regensburg; Prof. Dr.-Ing. Katrin Wolf, Berlin University of Applied Sciences and Technology

Current smart objects are mainly controlled through smart speakers or mobile devices. While this can be advantageous for some smart objects, […]

-

HapticIO, BMBF

Virtual tools that feel real
Funded by the BMBF
Duration: 2021-2024
Project partners: Berlin University of Applied Sciences and Technology; Intuity Media Lab; Konstrktiv GmbH; TH Ingolstadt; University of Regensburg

Motivation
Digital tools make little use of the knowledge users have gained from working with their analogue counterparts. Complex manual actions are not possible with […]

-

WINK, BMBF

Recognize hand movements: gesture control using an intelligent bracelet
Funded by the BMBF
Duration: 2020-2024
Project partners: Berlin University of Applied Sciences and Technology; Kinemic GmbH, Karlsruhe; tech-solute Industriedienstleistungen für die technische Produktinnovation GmbH & Co. KG, Bruchsal

Motivation
Digital technology is increasingly being integrated into everyone's everyday life, whether in […]

-

Illusionary Surface Interfaces, DFG

Funded by the DFG (Priority Program SPP 2199)
Duration: 2020-2024
Principal Investigators: Prof. Dr.-Ing. Katrin Wolf, Berlin University of Applied Sciences and Technology; Prof. Dr. Albrecht Schmidt, LMU Munich

Illusionary Surface Interfaces is a research project within the Priority Program Scalable Interaction Paradigms for Pervasive Computing Environments (SPP 2199). The project aims to investigate how haptic […]

-

KIMRA, LFF

Artistic Methods in Mixed Reality Exhibition Design
Funded by the LFF Hamburg
Duration: 2019-2022

Project-related publications
Takashi Goto, Swagata Das, Pedro Lopes, Yuichi Kurita, Kai Kunze and Katrin Wolf. Accelerating Skill Acquisition of Two-Handed Drumming using Pneumatic Artificial Muscles. In Proceedings of the Augmented Humans (AHs) International Conference 2020.
Niels Wouters, Ryan M […]

-

GEVAKUB, BMBF

Design guidelines for virtual exhibition spaces for cultural education
Funded by the BMBF
Duration: 2017-2020
Project partners: Berlin University of Applied Sciences and Technology; University of Bayreuth; University of Regensburg

In the joint project GEVAKUB, the University of Regensburg, the Berlin University of Applied Sciences and Technology, and the University of Bayreuth investigated […]

-

Architecture prototyping with VR

Even though three-dimensional representations of architectural models exist, experiencing these models as one would experience a fully constructed building is still a major challenge. With Virtual Reality (VR), it is now possible to experience a range of scenarios in a virtual environment. VR also makes it possible to prototype interactive architectural elements that might otherwise be very expensive to build. […]

-

Lifelog Video Retrieval

With the advent of lifelogging cameras, the amount of personal video material is growing massively, to an extent that easily overwhelms the user. To review lifelog data efficiently, we need well-designed video navigation tools. In this project, we analyze which cues are most beneficial for lifelog video navigation. We show that the information […]

-

Proxemic Zones of Exhibits

We investigate the intimate proxemic zones of exhibits, that is, zones that visitors respect and therefore do not enter. In a first experiment, we show that exhibits, like humans, have an intimate proxemic zone that exhibition visitors respect; this zone measures 27 cm. A zone around an exhibit that visitors will not enter can be […]

-

Paper TUI

Tangible user interfaces (TUIs) have been proposed for interacting with digital information through physical objects. However, although they have been investigated for decades, TUIs still play a marginal role compared to other UI paradigms. This is at least partly because TUIs often involve complex hardware elements, which make prototyping and production in large quantities difficult and expensive. In […]

-

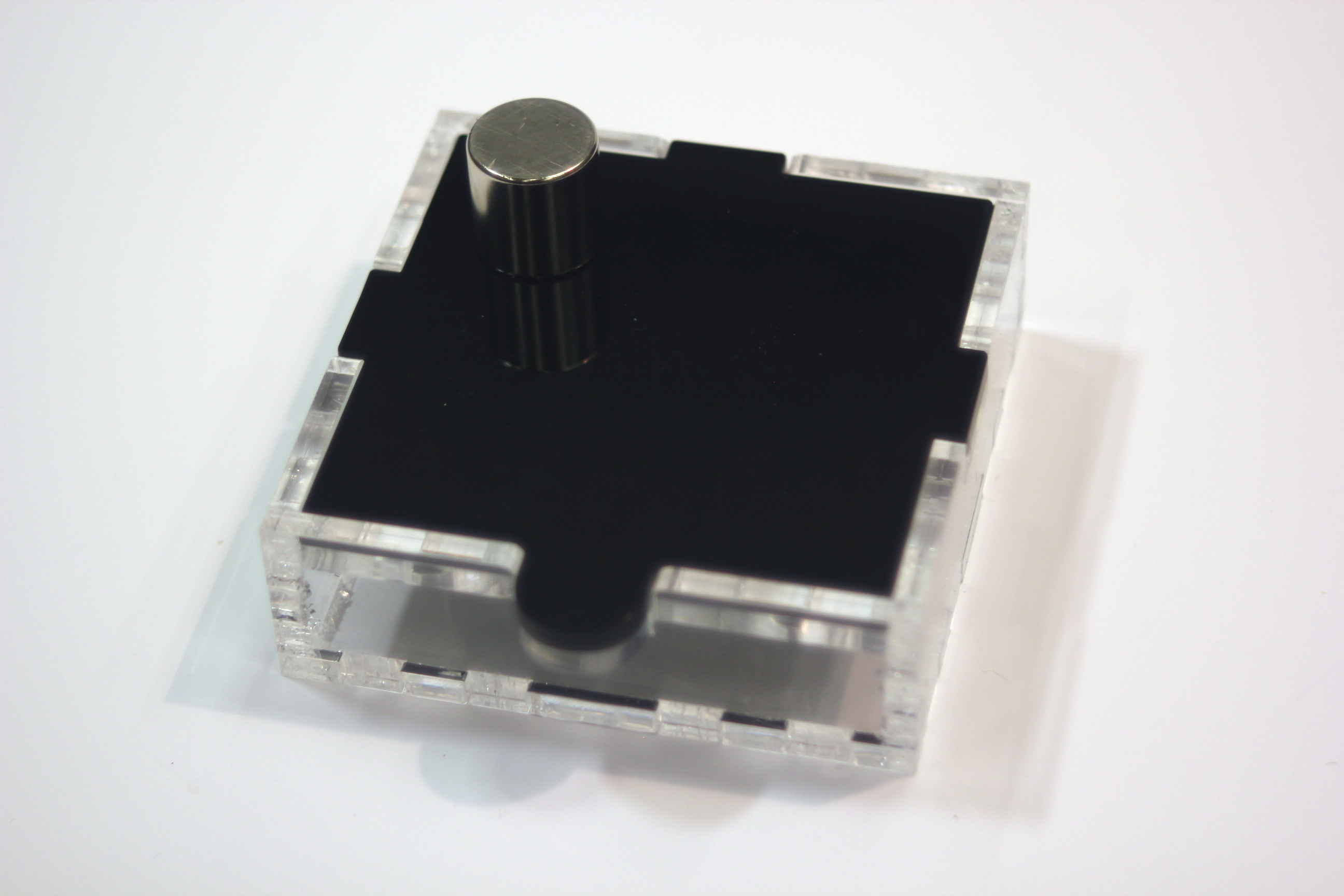

Surface Illusions

The work presented here aims to enrich material perception when touching interactive surfaces. This is achieved by simulating changes in the perception of various material properties, such as softness and bendability. The resulting perceptual illusions of surface change are induced using electrotactile stimuli and texture projection as feedback to touch and pressure input. A metal plate with […]

-

Pick-Ring

We frequently switch between devices, and currently we have to unlock most of them. Ideally, such devices should be seamlessly accessible and not require an unlock action. We introduce PickRing, a wearable sensor that enables seamless interaction with devices by predicting the user's intention to interact with a device through detecting when it is picked up. A […]

-

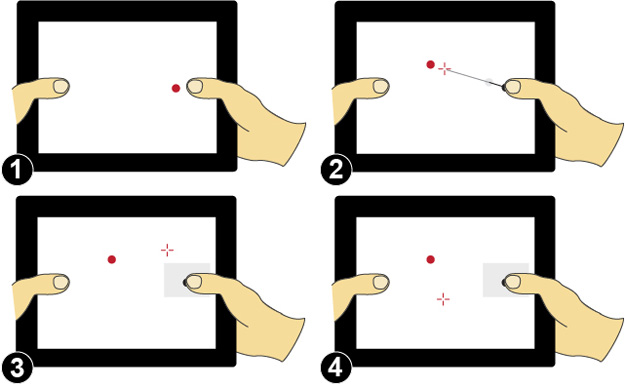

Tablet Interaction with Grasping Hands, dissertation

Tablet Interaction with Grasping Hands was the topic of my dissertation, which I carried out at T-Labs at the Technical University Berlin. I developed guidelines for a future device type: a tablet that allows ergonomic front- and back-of-device interaction. These guidelines are derived from empirical studies and developed to fit the users' skills to the way the […]

-

FUI: Feelable User Interfaces

Tangible User Interfaces (TUIs) represent digital information via a number of sensory modalities, including the haptic, visual, and auditory senses. We suggest that, despite the emphasis on tangible representation, interaction with tangible interfaces is commonly guided primarily by visual cues. We do not doubt that visual feedback offers rich interaction guidance, but argue that […]

-

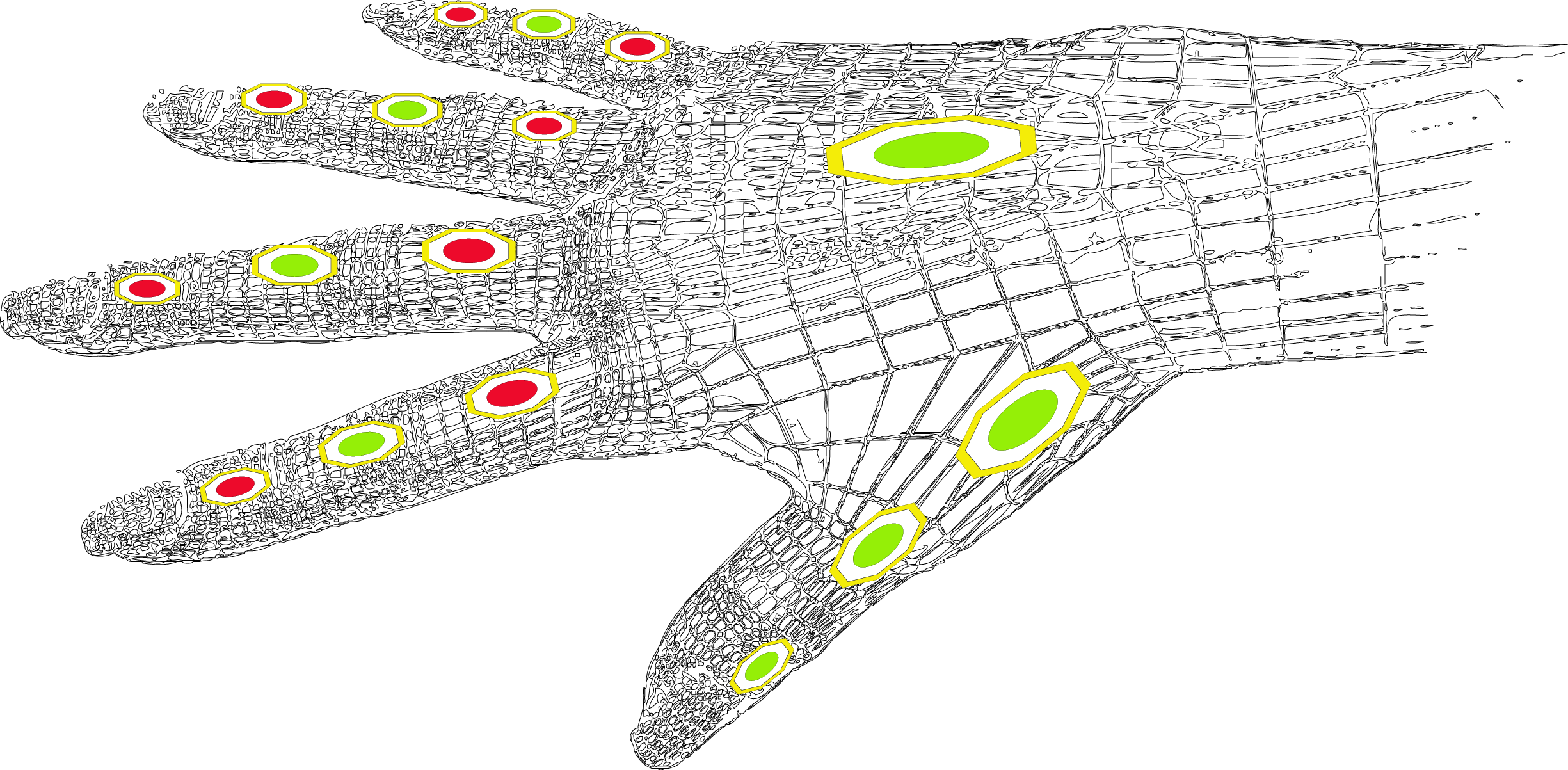

Whole Hand Modeling using 8 Wearable Sensors

Although data gloves allow the human hand to be modeled, they can reduce usability because they cover the entire hand, limit the sense of touch, and restrict the hand's flexibility. As modeling the whole hand has many advantages (e.g., for complex gesture detection), we aim for modeling […]

-

Tickle: A Surface-independent Interaction Technique for Grasp Interfaces

We present a wearable interface that consists of motion sensors. As the interface can be worn on the user's fingers (as a ring) or attached to them (with nail polish), the device controlled by finger gestures can be any generic object, provided it has an interface for receiving the sensor's signal. We implemented four […]

-

Flaw: seamless microinteractions for enriched drawing experience

This demonstration was presented at the World Haptics Conference 2011. We showed a finger-worn accelerometer interface for modifying stroke attributes while drawing on a touch surface. We support input through both finger touch and pen. For example, a scroll movement with the index finger (or pen) changes the stroke width, and shaking the hand […]

-

A Study of On-Device Gestures

Although gestural phone interaction (such as pinching on a touch screen to zoom content) is implemented in almost every mobile device, there are still no design guidelines for gestural control. Such guidelines should take ergonomics and hand anatomy into account. There are many human-side aspects to consider when designing gestures. […]

-

PinchPad: Performance of Touch-Based Gestures while Grasping Devices

This paper focuses on combining front- and back-of-device interaction on grasped devices using touch-based gestures. We designed generic interactions for discrete, continuous, and combined gesture commands that are executed without hand-eye control, because the performing fingers are hidden behind the grasped device. We designed the interactions in such a way that the thumb […]

-

Taxonomy of microinteractions: defining microgestures based on ergonomic and scenario-dependent requirements

This paper explores how microgestures can allow us to execute a secondary task, for example controlling mobile applications, without interrupting the manual primary task, for instance driving a car. To design microgestures iteratively, we interviewed sports therapists and physiotherapists while asking them to use task-related props, such as a steering wheel, […]

-

Touching the Void: Gestures for Auditory Interfaces

Mobile devices now offer new possibilities for gesture interaction due to their wide range of embedded sensors and their physical form factor. In addition, auditory interfaces can now be supported more easily thanks to advanced mobile computing capabilities. Although different types of gesture techniques have been proposed for handheld devices, there is still […]

-

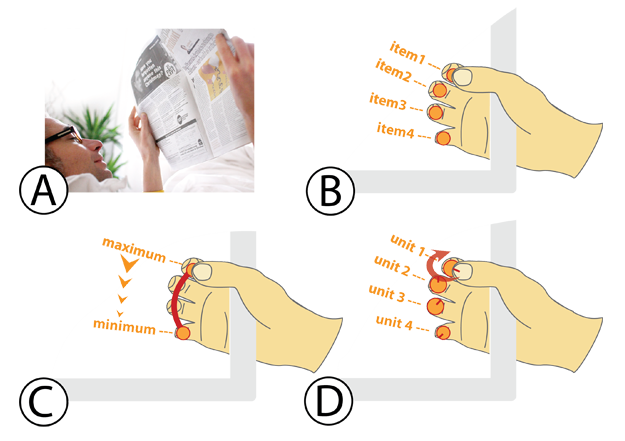

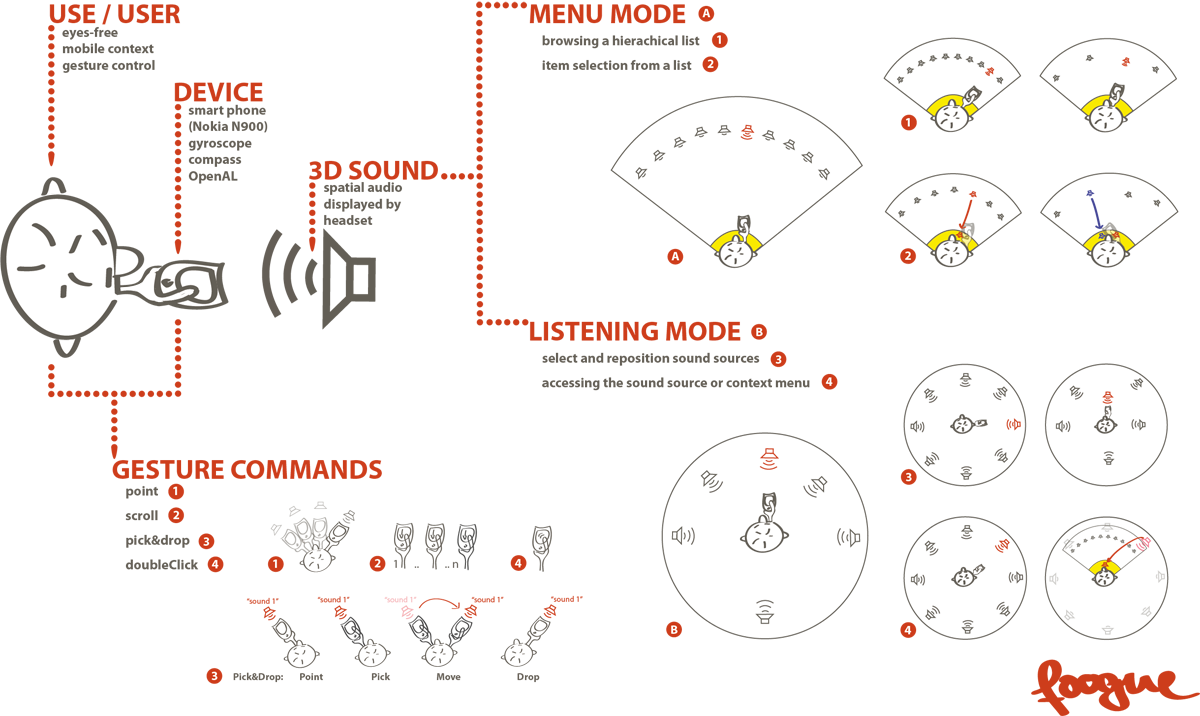

Foogue: Eyes-Free Interaction for Smartphones

Graphical user interfaces for mobile devices have several drawbacks in mobile situations. In this paper, we present Foogue, an eyes-free interface that utilizes spatial audio and gesture input. Foogue does not require visual attention and hence does not divert visual attention from the task at hand. Foogue has two modes, which are designed to fit […]